C++ Memory Arenas and Their Implications

Memory arenas were introduced into the default memory allocator used by C++ programs on Linux (glibc's malloc) to improve the performance of memory-intensive multi-threaded applications.

Before their introduction, every memory allocation had to be synchronised, which made memory allocation a major performance bottleneck. Memory arenas address this problem by introducing multiple memory pools, which serve allocations from multiple threads concurrently.

This is a well-written blog article about how memory arenas are implemented.

In contrast to the referenced article, this blog post focuses on how memory arenas affect the observed memory behavior of a process. This is especially interesting because that behavior is easily misinterpreted as a memory leak.

This article is based on a lightning talk presented at a meeting of the C++ user group in Munich. Both the article and the talk show how memory arenas can lead to leak-like behavior, using the following program:

https://github.com/celonis-se/memory-arena-example/blob/master/main.cpp

The program starts several workers in separate threads. There are two types of workers: the 30 MB worker and the 100 MB worker. The 30 MB worker allocates 30 MB of data, initializes it, and releases it after 100 milliseconds. It does that 10 times in a row. The 100 MB worker does the same, but allocates 100 MB blocks. The worker type and the number of workers to start are set via command line parameters.
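The worker loop described above can be sketched as follows. This is a minimal illustration and not the code from the linked repository: the names `run_worker` and `run_workers` and their parameters are hypothetical, and block size, round count, and hold time are passed in so the sketch can also be run with small values.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstring>
#include <thread>
#include <vector>

// Hypothetical worker: allocate a block, touch every byte so the pages
// become resident, hold the block briefly, free it, and repeat.
// Returns the total number of bytes allocated over all rounds.
std::size_t run_worker(std::size_t block_bytes, int rounds,
                       std::chrono::milliseconds hold_time) {
    assert(block_bytes > 0 && rounds >= 0);
    std::size_t total = 0;
    for (int i = 0; i < rounds; ++i) {
        char* block = new char[block_bytes];
        std::memset(block, 1, block_bytes);  // initialize the data
        // Reading a byte back through a volatile discourages the compiler
        // from optimizing the allocation away.
        volatile char sink = block[block_bytes - 1];
        (void)sink;
        total += block_bytes;
        std::this_thread::sleep_for(hold_time);
        delete[] block;
    }
    return total;
}

// Start `count` workers, each in its own thread, and wait for all of them.
// Returns the number of bytes allocated by all workers together.
std::size_t run_workers(int count, std::size_t block_bytes, int rounds,
                        std::chrono::milliseconds hold_time) {
    assert(count >= 0);
    std::vector<std::size_t> totals(static_cast<std::size_t>(count));
    std::vector<std::thread> threads;
    for (int w = 0; w < count; ++w) {
        threads.emplace_back([&totals, w, block_bytes, rounds, hold_time] {
            totals[static_cast<std::size_t>(w)] =
                run_worker(block_bytes, rounds, hold_time);
        });
    }
    for (auto& t : threads) t.join();
    std::size_t sum = 0;
    for (std::size_t t : totals) sum += t;
    return sum;
}
```

With the values from the text, a run of nine 30 MB workers would correspond to something like `run_workers(9, 30 * 1024 * 1024, 10, std::chrono::milliseconds(100))`, assuming MB is read as MiB.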

After all workers are done with their work, the program waits for user input. It gives the user three options: the first one ends the program, the second executes malloc_trim(0), and the third prints the memory arena statistics by calling malloc_stats().
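The three options map onto two glibc-specific calls from `<malloc.h>`. A minimal sketch of such a menu handler is shown here; the function name `handle_option` and the option characters are assumptions made for illustration and may differ from the linked program.

```cpp
#include <malloc.h>  // glibc-specific: malloc_trim, malloc_stats

#include <cassert>
#include <iostream>

// Hypothetical menu handler. Returns false when the program should exit.
bool handle_option(char option) {
    switch (option) {
        case 'q':  // end the program
            return false;
        case 't': {
            // malloc_trim returns 1 if memory was released to the OS, 0 otherwise.
            int released = malloc_trim(0);
            assert(released == 0 || released == 1);
            std::cout << "malloc_trim(0) returned " << released << '\n';
            return true;
        }
        case 's':
            malloc_stats();  // prints per-arena statistics to stderr
            return true;
        default:
            std::cout << "unknown option\n";
            return true;
    }
}
```

A caller would typically read one character per line from standard input and loop until `handle_option` returns false.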

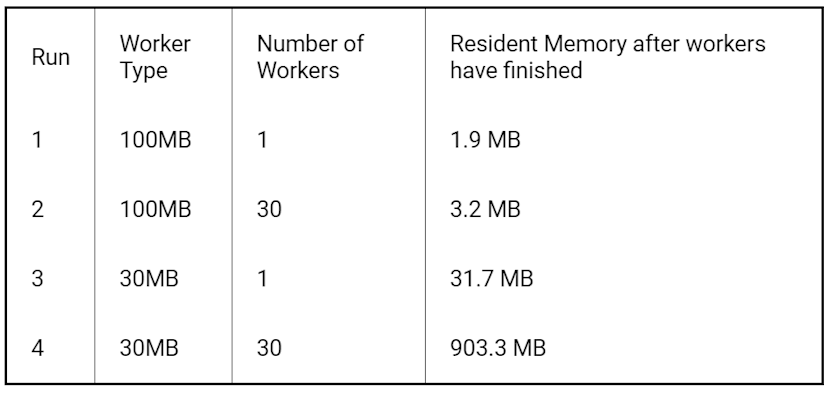

The following experiments were executed on an Ubuntu 16.04 machine with an 8-core CPU (4 physical cores). The table below shows the resident memory of the process for various combinations of worker type and worker count, after all workers have finished their work and released all their data.

The 900 MB of resident memory after run number 4 is worth investigating. A natural conclusion would be a memory leak, but that can be ruled out by checking the program with tools like Valgrind. The actual reason becomes clear from the arena statistics, which can be retrieved by calling malloc_stats().

For run 1, for example, malloc_stats() generates the following output:
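Resident memory can be read with tools like top or ps, or from inside the process itself. As an illustration only (the article does not say how the numbers were collected), the following sketch reads the `VmRSS` field from `/proc/self/status` on Linux. The function names are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <istream>
#include <sstream>
#include <string>

// Parse the "VmRSS:" line of a /proc/<pid>/status style stream.
// Returns the resident set size in kB, or -1 if the line is missing.
long parse_vm_rss_kb(std::istream& status) {
    std::string line;
    while (std::getline(status, line)) {
        if (line.compare(0, 6, "VmRSS:") == 0) {
            std::istringstream fields(line.substr(6));
            long kb = -1;
            fields >> kb;  // the value is followed by the unit "kB"
            return kb;
        }
    }
    return -1;
}

// Resident set size of the calling process in kB (Linux only).
long current_rss_kb() {
    std::ifstream status("/proc/self/status");
    return parse_vm_rss_kb(status);
}
```

Calling `current_rss_kb()` once after all workers have finished gives a number comparable to the resident memory column reported by top.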

    Arena 0:
    system bytes = 204800
    in use bytes = 74096
    Arena 1:
    system bytes = 135168
    in use bytes = 3264
    Total (incl. mmap):
    system bytes = 339968
    in use bytes = 77360
    max mmap regions = 1
    max mmap bytes = 104861696

Arena Output Test Run Results

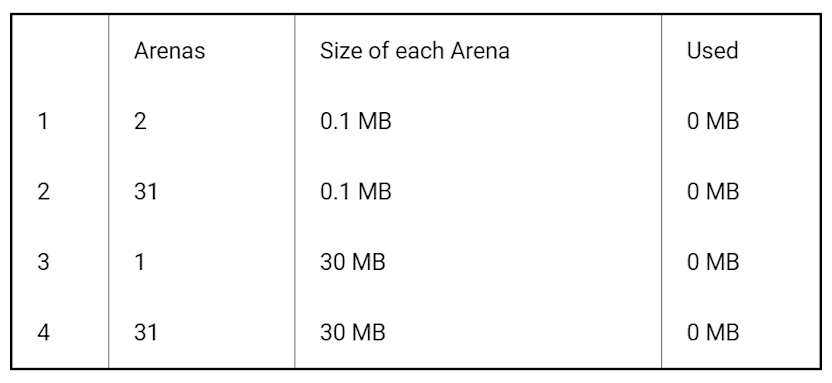

The output shows how many memory arenas exist, their size and how much is actually used. The results for each test run are collected in this table:

The tests indicate that a separate memory arena is created for each worker. Furthermore, it seems that a memory arena does not return freed memory to the OS, even when the arena is completely empty.

The difference in arena sizes between the 100 MB workers and the 30 MB workers can be explained by the fact that large allocations are treated differently from smaller ones. While smaller memory blocks are allocated inside a memory arena, larger memory blocks are allocated via mmap to avoid fragmentation. For more information on that see here.
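In glibc, the boundary between the two paths is the `M_MMAP_THRESHOLD` parameter. According to mallopt(3), it defaults to 128 KiB and is adjusted upwards dynamically, up to a maximum of 32 MiB on 64-bit systems. One plausible reading of the results, stated here as an assumption rather than something the tests prove, is that 30 MB blocks fall below that maximum and end up inside the arenas, while 100 MB blocks always go through mmap. The sketch below pins the threshold to a fixed value; `pin_mmap_threshold` is a hypothetical helper name.

```cpp
#include <malloc.h>  // glibc-specific: mallopt, M_MMAP_THRESHOLD

#include <cassert>
#include <cstddef>

// Pin the mmap threshold to a fixed value. Per mallopt(3), setting it
// explicitly also disables glibc's dynamic adjustment of the threshold.
// Returns true if glibc accepted the value.
bool pin_mmap_threshold(std::size_t bytes) {
    // 32 MiB is the documented upper limit on 64-bit systems.
    assert(bytes <= 32u * 1024 * 1024);
    return mallopt(M_MMAP_THRESHOLD, static_cast<int>(bytes)) == 1;
}
```

Calling `pin_mmap_threshold(1024 * 1024)` early in `main()` would be expected to route every allocation of 1 MiB or more through mmap, so freeing such a block returns its memory to the OS directly. Whether this changes the numbers reported in this article has not been measured here.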

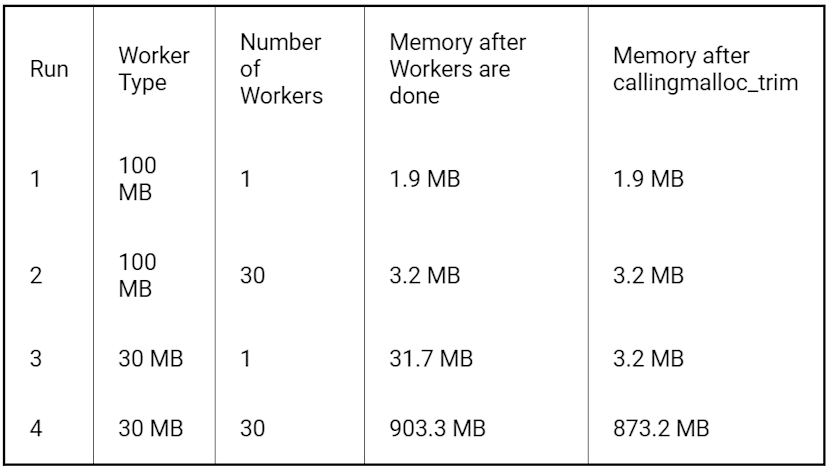

Concerning the problem that memory is not returned to the operating system, a post on Stack Overflow about memory management in multi-threaded environments recommended using malloc_trim.

The following table shows the arena states after applying malloc_trim, which according to its documentation attempts to release free memory at the top of the heap:

As the tests show, only 30 MB, the size of one memory arena, gets released. Further investigation revealed that malloc_trim only returns the unused bytes of the main arena back to the OS.

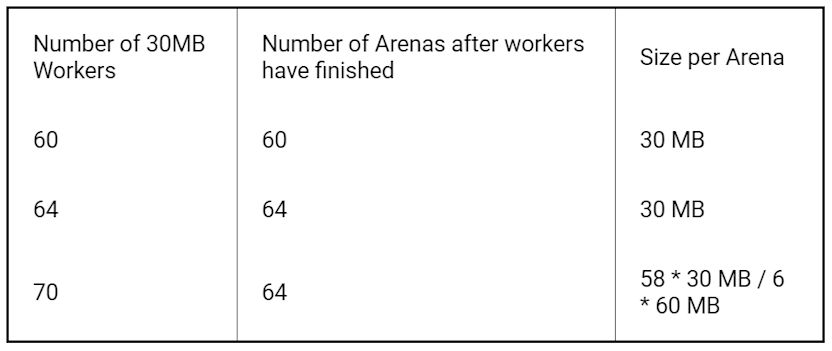

To the best of our knowledge, there is currently no way to return the memory held by the other arenas to the OS, which can cause a significant memory overhead. To limit the overhead, the number of arenas is limited by default to 8 times the number of cores (including hyper-threads). On the 8-core machine used here, this leads to a limit of 64 arenas.

If more than 64 workers are active, no additional memory arenas are created, but the existing ones may grow.
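The default limit is simple arithmetic, and glibc also lets a program lower it through `mallopt` with the `M_ARENA_MAX` parameter. The following is a minimal sketch assuming glibc; the helper names `default_arena_limit` and `cap_arenas` are hypothetical.

```cpp
#include <malloc.h>  // glibc-specific: mallopt, M_ARENA_MAX

#include <cassert>

// Default arena limit as described in the text: 8 arenas per logical core.
unsigned default_arena_limit(unsigned logical_cores) {
    return 8u * logical_cores;
}

// Lower the cap on the number of arenas. This sketch assumes the cap only
// affects arenas created afterwards, so it should run early in main().
// Returns true if glibc accepted the value.
bool cap_arenas(int max_arenas) {
    assert(max_arenas > 0);
    return mallopt(M_ARENA_MAX, max_arenas) == 1;
}
```

For the machine used here, `default_arena_limit(8)` evaluates to 64. The same cap can be set without recompiling through the `MALLOC_ARENA_MAX` environment variable. A lower cap trades less retained memory for more contention between threads, so the right value depends on the workload.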

Conclusion

Memory arenas can significantly improve performance, but they can also cause significant memory overhead, especially in long-running, multi-threaded programs.

This behavior is easily mistaken for a memory leak. If in doubt whether there is a memory leak or whether the memory is being held by a memory arena, check the arena statistics by calling malloc_stats().